- #Python free memory install

- #Python free memory generator

- #Python free memory software

- #Python free memory free

A simple way to free objects that are no longer referenced is to use the garbage collector, which Python exposes through the gc module.
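
As a rough illustration of the garbage collector at work, the sketch below creates objects that reference each other in a cycle and then asks the collector to reclaim them with `gc.collect()`. The `Node` class and the helper names are hypothetical and exist only for this example.

```python
import gc


class Node:
    """Hypothetical object that can take part in a reference cycle."""

    def __init__(self):
        self.partner = None


def make_cycle():
    # Two objects that point at each other: once this function returns,
    # nothing else references them, but their reference counts stay above zero.
    a, b = Node(), Node()
    a.partner, b.partner = b, a


def collect_after_cycles(count=1000):
    """Create `count` unreachable cycles, then return what gc.collect() reports."""
    was_enabled = gc.isenabled()
    gc.disable()  # pause automatic collection so the explicit call does the work
    try:
        for _ in range(count):
            make_cycle()
        # gc.collect() runs a full collection and returns the number of
        # unreachable objects it found.
        return gc.collect()
    finally:
        if was_enabled:
            gc.enable()


found = collect_after_cycles()
print("unreachable objects found:", found)
```

Objects without cycles are normally freed as soon as their last reference disappears, so an explicit `gc.collect()` mostly matters for cyclic structures like the one assumed here.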

Another useful technique for keeping memory free while we work with a large number of objects is to read the data in small chunks. This kind of reading allows us to process large data within a limited size without using up the system memory completely.

Code:

`def readbits(filename, mode="r", chunk_size=20):`

`filename = "C://Users//Balaji//Desktop//Test"`
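
Only the signature of `readbits` survives in the text, so the body below is an assumption about what such a chunked reader might look like, not the original code. It is written as a generator that yields at most `chunk_size` characters at a time, and the demo uses a temporary file as a stand-in for the real path.

```python
import os
import tempfile


def readbits(filename, mode="r", chunk_size=20):
    """Yield successive chunks of at most `chunk_size` characters (assumed body)."""
    with open(filename, mode) as handle:
        while True:
            chunk = handle.read(chunk_size)
            if not chunk:
                # An empty read means the end of the file was reached.
                break
            yield chunk


def demo():
    """Write a 50-character temporary file and return the size of each chunk read."""
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as tmp:
        tmp.write("x" * 50)
        path = tmp.name
    try:
        return [len(chunk) for chunk in readbits(path)]
    finally:
        os.remove(path)


print(demo())
```

Only one chunk is held in memory at any moment, so the peak memory use depends on `chunk_size` rather than on the size of the file.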

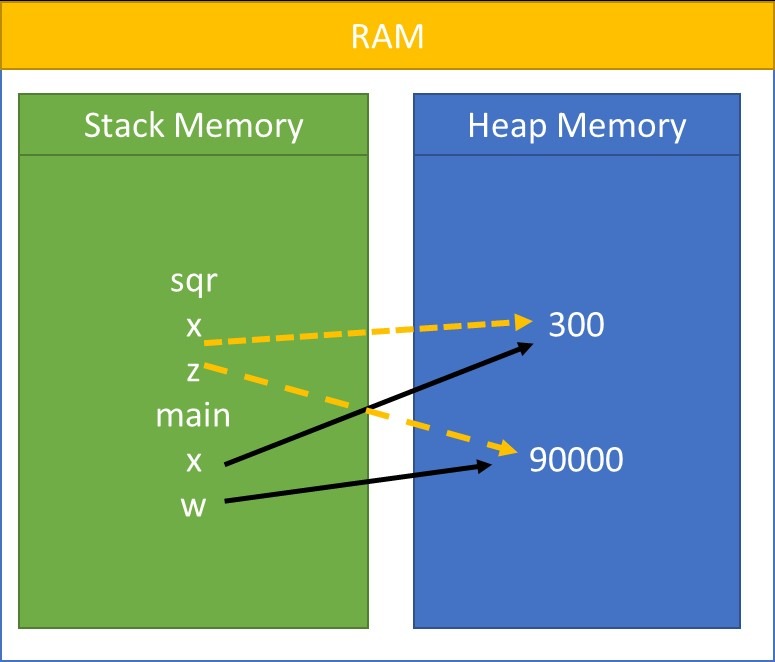

Iterators can be used to loop over data. A normal function that builds and returns the whole collection must hold the entire dataset in memory before the loop can start, which can raise a Memory Error and terminate the program. This is where the generator comes in handy: it produces the data lazily instead of materializing the complete dataset. A generator function differs from other functions in that it uses a statement called yield in place of the traditional return statement that hands back the function's output.

A sample generator function is given as an example: the integers are produced by a sample generator function, the resulting generator is assigned to the variable gen_integ, and that variable is then iterated. This allows us to iterate over one value at a time instead of passing around the entire set of integers. In the readbits sample, we read a large dataset in small bits using the same kind of generator function.
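
A minimal sketch of such a generator function might look like the following. The name `sample_generator` and its `limit` parameter are assumptions made for illustration; only the variable name `gen_integ` comes from the text.

```python
def sample_generator(limit):
    """Hypothetical generator: yield the integers 0 .. limit-1 one at a time."""
    for value in range(limit):
        # `yield` hands back one value and pauses the function here
        # until the caller asks for the next one.
        yield value


# Calling the function does not run its body yet; it returns a generator object.
gen_integ = sample_generator(5)

first = next(gen_integ)   # runs the body up to the first yield
rest = list(gen_integ)    # consumes the values that remain

print(first, rest)
```

Because each value is produced on demand, the full sequence never has to exist in memory at once.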

The most important case of Memory Error in Python is the one that occurs when using large datasets. When working on Machine Learning problems, we often come across large datasets, and running an ML algorithm for classification or clustering on them can quickly exhaust the computer's memory: the program tries to store all the data at once, uses up the memory, and throws a Memory Error.

We can overcome such problems with generator functions. Generators are functions that return an iterator, and they can be written as ordinary user-defined functions. They let us process a large dataset in many segments without loading the complete dataset, which makes them very useful in big projects that deal with a large volume of data.
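
One way to picture processing a dataset "in segments" is a generator that yields fixed-size batches. This is a sketch under assumptions: `iter_batches` and `batch_sums` are hypothetical helper names, and summing stands in for whatever per-batch work an ML pipeline would do.

```python
from itertools import islice


def iter_batches(records, batch_size):
    """Yield lists of up to `batch_size` items from any iterable (hypothetical helper)."""
    iterator = iter(records)
    while True:
        # islice pulls at most batch_size items without touching the rest.
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def batch_sums(records, batch_size):
    """Process each batch independently; here the 'work' is just a sum."""
    return [sum(batch) for batch in iter_batches(records, batch_size)]


print(batch_sums(range(10), 4))
```

Only one batch is materialized at a time, so memory use is bounded by `batch_size` even when `records` is itself a lazy source such as a file or another generator.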

Different types of Memory Error occur in Python programming. Sometimes you will get a Memory Error even though your RAM is large enough to handle the dataset. This can be due to the Python build you are using: a 32-bit interpreter can address only a few gigabytes of memory per process, no matter how much RAM a 64-bit system has. In such cases, you can uninstall 32-bit Python from your system and install the 64-bit version from the Anaconda website.

Installing Python packages with the pip command or other commands may sometimes lead to an improper installation that throws a Memory Error. In such cases, we can install those packages with the conda install command instead.

Another type of Memory Error occurs when the data exceeds the RAM capacity and the memory manager has already used the hard disk space of our system to store the overflow.
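
Before reinstalling anything, it may help to check which build is actually running. The sketch below uses two standard-library checks; the function names are hypothetical.

```python
import struct
import sys


def interpreter_bits():
    """Return the pointer width of the running interpreter in bits."""
    # "P" is the struct format code for a C pointer (void *).
    return struct.calcsize("P") * 8


def is_64bit():
    """Alternative check: sys.maxsize exceeds 2**32 only on 64-bit builds."""
    return sys.maxsize > 2**32


print(f"Running a {interpreter_bits()}-bit Python build")
```

If this reports 32 on a 64-bit operating system, the interpreter itself is the limiting factor rather than the installed RAM.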

When working with Machine Learning algorithms, large datasets are one of the most common causes of a Memory Error.

Most often, a Memory Error occurs when the program creates so many objects that the RAM runs out. For example, the NumPy call below asks for uniform random numbers between a low value of 1 and a high value of 10 in an array of shape 10000 by 100000. That is one billion 64-bit floats, roughly 8 GB, so the call throws a Memory Error on a machine whose RAM cannot hold that volume of data.

`np.random.uniform(low=1, high=10, size=(10000, 100000))`

For the same function, let us see the Name Error.
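
The size of that array can be estimated without allocating it. The sketch below is plain Python and assumes 8 bytes per element, which matches the 64-bit floats that `np.random.uniform` returns; the helper names are hypothetical.

```python
import math


def estimate_array_bytes(shape, itemsize=8):
    """Estimate the memory an array of `shape` would need, at `itemsize` bytes per element."""
    return math.prod(shape) * itemsize


def to_gib(num_bytes):
    """Convert a byte count to gibibytes (2**30 bytes)."""
    return num_bytes / 2**30


needed = estimate_array_bytes((10000, 100000))
print(f"{needed} bytes, about {to_gib(needed):.2f} GiB")
```

Comparing an estimate like this against the available RAM before calling NumPy is one way to decide whether the data should be generated or processed in smaller pieces.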
